In e-commerce, the long tail — thousands of niche products — can account for a significant share of revenue.

Yet traditional keyword search hides them. Queries that don’t match exact keywords return either irrelevant results or a “no results” page.

Shoppers increasingly use natural language (“lightweight summer footwear”) or loosely related phrases. If your search can’t understand them, you lose product discovery, conversions, and revenue.

Vector search changes this. It represents queries and products as high-dimensional vectors (embeddings) and retrieves the products closest to the query in that space, so matching reflects meaning rather than exact word overlap. This lets you:

- Match vague or rare queries to relevant products

- Suggest alternatives when no direct match exists

- Embed behavioral signals for personalized recommendations

The result: customers discover more products, buy additional items, and stay engaged longer.
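To make "closest in that space" concrete, here is a minimal sketch of the core comparison: cosine similarity between a query vector and product vectors. The 4-dimensional vectors and their axis labels are hand-made assumptions for illustration; a real embedding model produces hundreds of dimensions with no human-readable axes.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical toy embeddings. Assumed axes: [footwear, summer, lightweight, electronics]
catalog = {
    "mesh running sandal": [0.9, 0.8, 0.9, 0.0],
    "insulated winter boot": [0.9, 0.0, 0.1, 0.0],
    "bluetooth speaker": [0.0, 0.3, 0.4, 0.9],
}
query = [0.8, 0.9, 0.8, 0.0]  # stand-in embedding for "lightweight summer footwear"

# Rank products by semantic closeness to the query, most similar first.
ranked = sorted(catalog, key=lambda name: cosine_similarity(query, catalog[name]), reverse=True)
print(ranked)
```

None of the product names contains "lightweight", "summer", or "footwear", yet the sandal ranks first because its vector points in nearly the same direction as the query's.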

Why Long-Tail Discovery Is a Growth Lever

- Unlock hidden inventory — Niche SKUs get surfaced in relevant searches.

- Eliminate zero-result frustration — Show semantically similar products even without exact keyword matches.

- Enable natural-language queries — Understand intent beyond keywords.

- Power cross-sell & upsell — Recommend accessories, premium versions, and bundles based on semantic similarity.
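A zero-result fallback can be sketched in a few lines: try keyword matching first and, only if it returns nothing, rank products by embedding similarity instead. `keyword_search`, `search_with_fallback`, the SKUs, and the toy vectors are all hypothetical; production systems usually blend both signals rather than switching between them.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def keyword_search(query: str, titles: dict[str, str]) -> list[str]:
    """Deliberately naive keyword match: every query word must appear in the title."""
    words = query.lower().split()
    return [sku for sku, title in titles.items()
            if all(w in title.lower().split() for w in words)]


def search_with_fallback(query, query_vec, titles, vectors, k=2):
    hits = keyword_search(query, titles)
    if hits:
        return hits[:k], "keyword"
    # No exact match: fall back to the nearest products by embedding similarity.
    ranked = sorted(vectors, key=lambda sku: cosine(query_vec, vectors[sku]), reverse=True)
    return ranked[:k], "vector"


titles = {"SKU-1": "Mesh Running Sandal", "SKU-2": "Insulated Winter Boot", "SKU-3": "Bluetooth Speaker"}
vectors = {"SKU-1": [0.9, 0.8, 0.9, 0.0], "SKU-2": [0.9, 0.0, 0.1, 0.0], "SKU-3": [0.0, 0.3, 0.4, 0.9]}

print(search_with_fallback("winter boot", [0.9, 0.0, 0.2, 0.0], titles, vectors))
print(search_with_fallback("lightweight summer footwear", [0.8, 0.9, 0.8, 0.0], titles, vectors))
```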

OpenSearch Vector Engine: Lower Costs, Higher Recall

OpenSearch has evolved into a leading open-source vector database with innovations that make large-scale vector workloads practical:

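- Disk-based vector search

  - 32× compression with binary quantization reduces memory from ~3 KB to ~96 bytes per vector (for a 768-dimensional float32 vector: 768 × 4 bytes = 3,072 bytes vs 768 bits = 96 bytes).

  - Two-phase search: a scan over the compressed index, then full-precision rescoring of the top candidates.

  - Cuts costs while maintaining high recall, which makes it well suited to massive catalogs.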

- Memory-optimized workload modes

  - In-memory mode for lowest latency, on-disk mode for cost efficiency.

  - Tunable compression levels (1×–32×) trade speed against memory usage.

  - From OpenSearch 3.1, vectors can be loaded on demand from disk.

- GPU-accelerated indexing

  - 9.3× faster index builds via NVIDIA CAGRA.

  - 3.75× lower indexing cost than CPU-only builds.

  - 2.5× lower CPU load, doubling ingestion throughput.

- Lucene 10 and OpenSearch 3.0 performance

  - ≈10× faster search and ≈2.5× faster vector search than OpenSearch 1.x.

  - ~90% lower latency than OpenSearch 1.3.

  - SIMD vectorization and smarter query planning deliver 87% faster full-text queries.

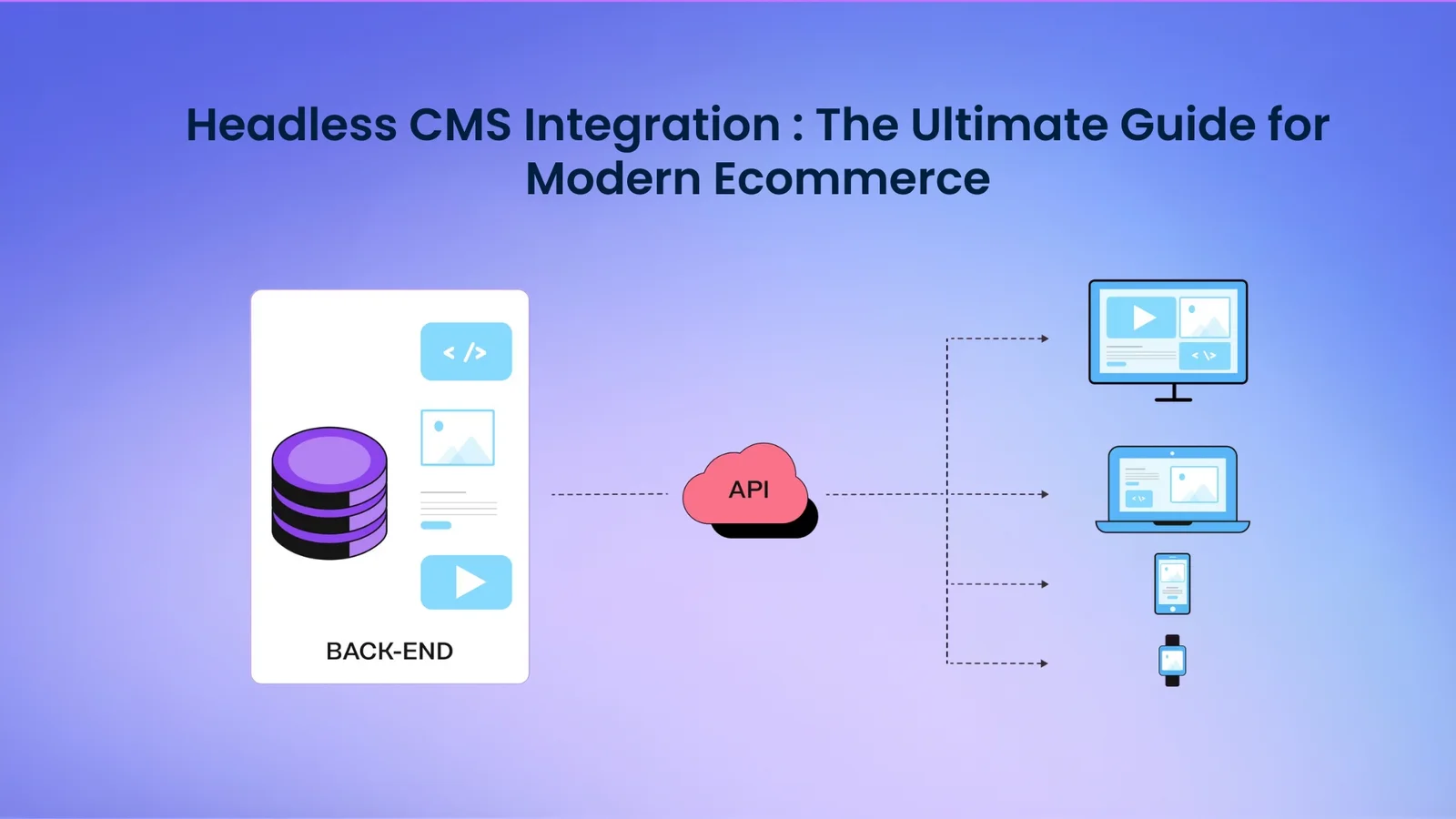

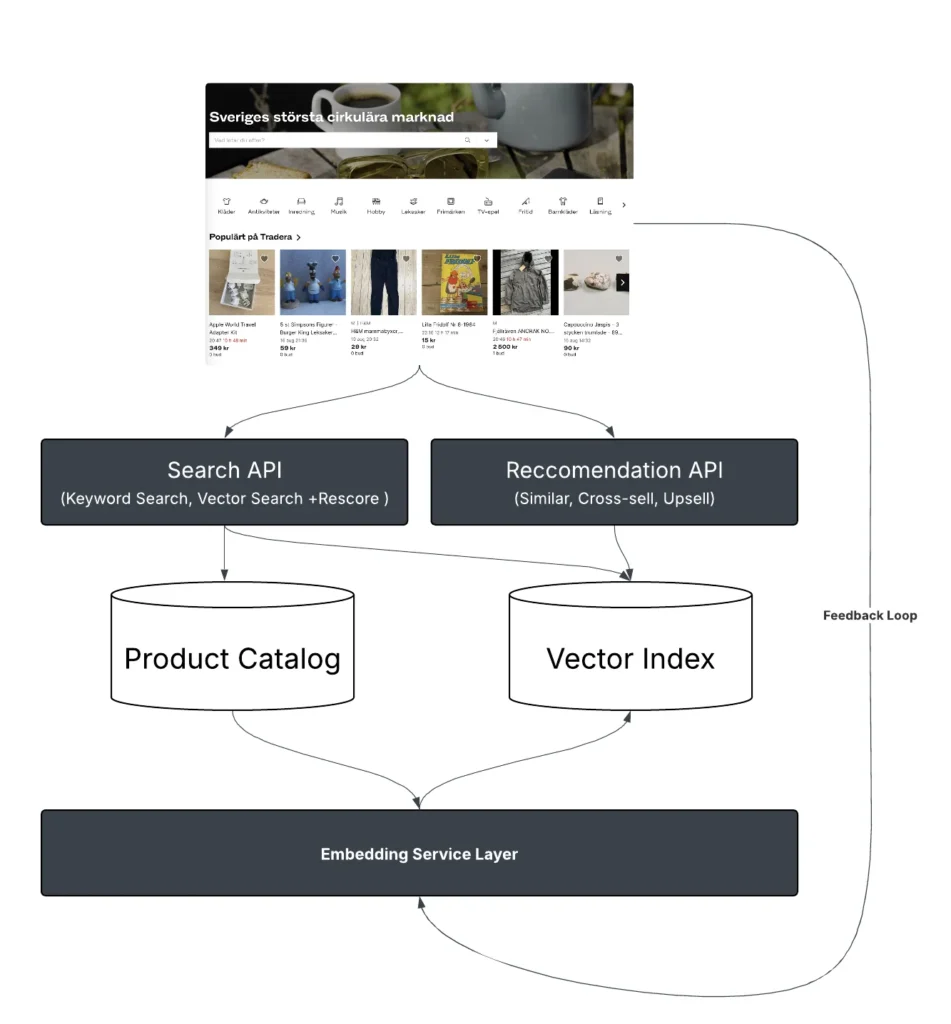
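The two ideas behind disk-based vector search, binary quantization and two-phase search, can be illustrated with a simplified, stdlib-only sketch: each vector is reduced to one sign bit per dimension, candidates are found by Hamming distance over the packed bits, and only the top `k × oversample` candidates are rescored with full-precision dot products. The sign threshold, `oversample=3`, the 768 dimensions, and the random data are our assumptions; OpenSearch's own quantizer and search internals are not reproduced here.

```python
import random


def binarize(vec: list[float]) -> int:
    """Pack one bit per dimension (1 if the value is positive) into an int."""
    bits = 0
    for i, x in enumerate(vec):
        if x > 0:
            bits |= 1 << i
    return bits


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two packed codes."""
    return bin(a ^ b).count("1")


def dot(a: list[float], b: list[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def two_phase_search(query, full_vectors, codes, k, oversample=3):
    q_code = binarize(query)
    # Phase 1: cheap scan over compressed codes, keeping k * oversample candidates.
    candidates = sorted(range(len(codes)), key=lambda i: hamming(q_code, codes[i]))[: k * oversample]
    # Phase 2: rescore only those candidates with full-precision vectors.
    return sorted(candidates, key=lambda i: dot(query, full_vectors[i]), reverse=True)[:k]


DIM = 768
# float32 bytes per vector, binary bytes per vector, compression ratio
print(DIM * 4, DIM // 8, (DIM * 4) // (DIM // 8))  # 3072 96 32

random.seed(0)
vectors = [[random.gauss(0, 1) for _ in range(DIM)] for _ in range(500)]
codes = [binarize(v) for v in vectors]
query = [x + random.gauss(0, 0.1) for x in vectors[42]]  # slightly perturbed copy of item 42
print(two_phase_search(query, vectors, codes, k=5))
```

The rescoring phase is what recovers precision lost to quantization: the bit codes only need to be good enough to keep the true neighbors inside the oversampled candidate set.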

How Vector Search Fits Your Architecture

- Embedding service – Convert product data and queries into dense vectors (256–1024 dims) via models like BERT, Sentence-Transformers, or CLIP (for images).

- Vector index – Store vectors in `knn_vector` fields. Configure `on_disk` mode and a compression level for long-tail catalogs. Use HNSW graphs or GPU-built CAGRA graphs.

- Search pipeline – Embed the query, run a `knn` search, rescore with full-precision vectors, combine with keyword matches and filters, and apply business boosts.

- Recommendation layer – Use the same embeddings for “related items” and “customers also bought” features.
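The vector index and search pipeline steps map onto OpenSearch request bodies. The sketch below only builds those bodies as Python dicts; the field names (`product_embedding`, `title`, `category`), the 768 dimensions, and the oversample factor are hypothetical, and while the parameter names (`knn_vector`, `mode`, `compression_level`, `rescore.oversample_factor`, and the `filter` clause) follow the OpenSearch k-NN documentation as we understand it (2.17 and later), they should be checked against the docs for your version.

```python
import json
from typing import Optional

VECTOR_FIELD = "product_embedding"  # hypothetical field name


def product_index_body(dimension: int = 768) -> dict:
    """Index settings and mapping with a disk-optimized knn_vector field."""
    return {
        "settings": {"index": {"knn": True}},
        "mappings": {
            "properties": {
                "title": {"type": "text"},
                "category": {"type": "keyword"},
                VECTOR_FIELD: {
                    "type": "knn_vector",
                    "dimension": dimension,       # must match the embedding model
                    "space_type": "innerproduct",
                    "mode": "on_disk",            # favor memory savings over raw latency
                    "compression_level": "32x",   # binary quantization
                },
            }
        },
    }


def knn_query_body(query_vector: list, k: int = 10,
                   category: Optional[str] = None, oversample: float = 3.0) -> dict:
    """k-NN query that rescores oversampled candidates with full-precision vectors."""
    clause = {
        "vector": query_vector,
        "k": k,
        "rescore": {"oversample_factor": oversample},
    }
    if category is not None:
        # Restrict candidates to one category during the vector search.
        clause["filter"] = {"term": {"category": category}}
    return {"size": k, "query": {"knn": {VECTOR_FIELD: clause}}}


# A real query vector must have `dimension` elements; shortened here for readability.
print(json.dumps(knn_query_body([0.12, -0.03, 0.88], k=5, category="sandals"), indent=2))
```

With the opensearch-py client, these bodies would be passed to `client.indices.create(index=..., body=...)` and `client.search(index=..., body=...)`.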

Business Impact: The Numbers

Long-tail lift — Relevant results for vague queries like “toys for small children” increase discovery and niche SKU sales.

Cross-sell gains — Similarity-based recommendations surface accessories and premium products naturally.

| KPI | Impact | Source |
|---|---|---|
| Conversion rate | +26% from targeted recommendations | Salesforce |
| Average order value | +50% from personalized cross-selling | Salesforce |
| Likelihood to buy | 80% of customers more likely to buy when offered personalization | Epsilon |
| Search performance | ≈10× faster search, ≈2.5× faster vector search vs OpenSearch 1.x | OpenSearch 3.0 |
| Latency | ~90% lower than OpenSearch 1.3 | OpenSearch 3.0 |
| Indexing speed | 9.3× faster with GPU | OpenSearch GPU benchmarks |
| Indexing cost | 3.75× lower with GPU | OpenSearch GPU benchmarks |

Real-World Applications

- Text embeddings for discovery – Natural-language skincare search increased visibility of niche, cruelty-free products.

- Image embeddings for style match – Home-decor store recommended visually similar items to boost cross-category sales.

- Cart-based recommendations – Electronics retailer suggested compatible accessories in real time, increasing basket size.
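A cart-based recommendation of this kind can be sketched by averaging the embeddings of the items already in the cart and suggesting the nearest products not yet in it. Averaging is one simple heuristic we chose for illustration, not the retailer's documented method; the SKUs and 3-dimensional vectors are hypothetical.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def mean_vector(vectors: list[list[float]]) -> list[float]:
    """Element-wise average of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def cross_sell(cart_skus: set[str], embeddings: dict[str, list[float]], k: int = 2) -> list[str]:
    cart_vec = mean_vector([embeddings[s] for s in cart_skus])
    # Only recommend products that are not already in the cart.
    candidates = [s for s in embeddings if s not in cart_skus]
    return sorted(candidates, key=lambda s: cosine(cart_vec, embeddings[s]), reverse=True)[:k]


embeddings = {
    "camera-body": [0.9, 0.1, 0.0],
    "camera-lens": [0.8, 0.3, 0.0],
    "memory-card": [0.7, 0.2, 0.1],
    "yoga-mat": [0.0, 0.1, 0.9],
}
print(cross_sell({"camera-body"}, embeddings))
```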

RBMsoft Advantage

We deploy vector search at scale for retailers with catalogs of 50M+ SKUs, integrating:

- GPU-accelerated indexing pipelines

- Custom text + image embedding services

- Real-time personalization rules

- Hybrid search (vector + keyword) with business boosts
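One common way to merge keyword and vector result lists is reciprocal rank fusion (RRF), which scores each product as the sum of 1 / (k + rank) over the lists it appears in, with k = 60 as the conventional constant. The sketch below applies a per-SKU multiplier afterwards as a stand-in for business boosts; RRF and the boost rule are our illustrative choices, not a description of any specific deployment, and the SKUs are hypothetical.

```python
def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> dict[str, float]:
    """Sum 1 / (k + rank) for every list a document appears in (ranks start at 1)."""
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc in enumerate(ranking, start=1):
            scores[doc] = scores.get(doc, 0.0) + 1.0 / (k + rank)
    return scores


def hybrid_rank(keyword_hits, vector_hits, boosts=None, k=60):
    scores = reciprocal_rank_fusion([keyword_hits, vector_hits], k=k)
    boosts = boosts or {}
    # Hypothetical business rule: multiply the fused score by a per-SKU boost (default 1.0).
    return sorted(scores, key=lambda d: scores[d] * boosts.get(d, 1.0), reverse=True)


keyword_hits = ["sku-a", "sku-b"]
vector_hits = ["sku-c", "sku-a", "sku-d"]
print(hybrid_rank(keyword_hits, vector_hits, boosts={"sku-d": 1.5}))
# ['sku-a', 'sku-d', 'sku-c', 'sku-b']
```

RRF only uses ranks, so it sidesteps the problem that keyword relevance scores and vector similarities live on different scales.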

Our architectures reduce infrastructure cost while improving discovery, conversion, and cross-sell performance.

Conclusion & Next Steps

Vector search turns keyword lookup into semantic discovery.

OpenSearch innovations now make it affordable, fast, and scalable for large catalogs.

Next step: Audit your current search, identify high-value long-tail queries, and integrate vector-based retrieval for discovery and recommendations.
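A first pass at that audit can be as simple as counting which queries return zero results, most frequent first. The sketch assumes a search log of `(query, result_count)` pairs, a hypothetical format; real logs will need their own parsing and usually more signals, such as clicks and conversions.

```python
from collections import Counter


def long_tail_gaps(search_log: list[tuple[str, int]], top_n: int = 3) -> list[tuple[str, int]]:
    """Count zero-result queries (case- and whitespace-normalized), most frequent first."""
    zero = Counter(q.strip().lower() for q, n_results in search_log if n_results == 0)
    return zero.most_common(top_n)


log = [
    ("lightweight summer footwear", 0),
    ("running shoes", 42),
    ("Lightweight summer footwear", 0),
    ("toys for small children", 0),
    ("running shoes", 40),
]
print(long_tail_gaps(log))
# [('lightweight summer footwear', 2), ('toys for small children', 1)]
```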

Book your free consultation with RBMsoft to see how we can deploy OpenSearch vector search to grow your long-tail revenue and cross-sell performance.