User experience isn’t something you can just slap on at the end anymore – it’s what actually drives revenue now. The faster people find what they want, the more they buy. Pretty simple math, but a lot of platforms still get this wrong.

Here’s what’s interesting: search is usually the hidden problem nobody talks about. Customers hit your site, type something in the search box, get garbage results, and leave. You’re probably losing sales every day without even realizing it.

The real question for anyone running a serious platform isn’t whether better search matters (it obviously does). It’s how you build search that actually scales with your business and doesn’t break when traffic spikes during Black Friday. That’s where Elasticsearch gets interesting. It’s fast, handles massive datasets without choking, and gives you the flexibility to build features that actually matter to customers. Plus it doesn’t require rebuilding your entire infrastructure to make it work.

We’ll walk through how this stuff actually works, why it makes such a difference for user experience, and what kind of results you can expect. There are some solid case studies from companies that went from struggling with search to making it a competitive advantage.

Elasticsearch Fundamentals and Mechanics

Understanding What Elasticsearch Is

Elasticsearch is built on Apache Lucene, a mature open-source search library that sits underneath many of the search products in use today. But where Lucene is just the core engine, Elasticsearch wraps it with the distributed computing and API layers you need to actually use it at scale.

Here’s what matters if you’re not a search engineer:

Built on solid foundations – Lucene handles the hardcore indexing and ranking logic. Elasticsearch adds the clustering, replication, and REST APIs that make it usable without a PhD in computer science.

Scales horizontally – Your data gets split into shards and spread across multiple servers. When you need more capacity, you add more machines. When servers crash (and they will), replica shards on other nodes keep everything running, so no single machine becomes a single point of failure.

Near real-time updates – Unlike older systems that batch everything overnight, new products or price changes show up in search results within seconds (by default an index refreshes about once per second). That’s crucial when your catalog changes constantly.

This isn’t just about having a faster search box. It’s infrastructure that directly affects whether people buy from you or bounce to a competitor. Good search reduces the engineering headaches of building something custom while actually improving business metrics.

How Elasticsearch Works in E-commerce

Here’s how Elasticsearch handles e-commerce workloads effectively. It indexes product data into distributed shards, enabling rapid search performance across large catalogs. The Query DSL combines full-text search capabilities with filtering and relevance boosting, while aggregations power both faceted navigation and business analytics.

This architecture ensures customers locate products quickly, which translates to better conversion rates and stronger overall site performance.

Shards split data for faster lookups

Rather than loading your entire product catalog onto a single database server, Elasticsearch distributes the data across smaller units called shards. These shards operate on separate machines, allowing multiple servers to process search queries simultaneously.

This distribution transforms performance significantly. With five shards, a search across 5 million products becomes five parallel operations across roughly 1 million products each, with different servers handling each segment. Response times remain consistent as catalogs expand, and the system maintains availability even when individual nodes experience failures.
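To make the five-shard example concrete, here is a minimal sketch of the settings body you might send when creating an index (`PUT /<index>`). The shard and replica counts are illustrative assumptions, not recommendations, and the helper names are made up for this example.

```python
import json


def index_settings(primary_shards: int = 5, replicas: int = 1) -> dict:
    """Build the body for a create-index request (assumed values)."""
    return {
        "settings": {
            "number_of_shards": primary_shards,   # fixed at index creation
            "number_of_replicas": replicas,       # can be changed later
        }
    }


def docs_per_shard(total_docs: int, primary_shards: int) -> int:
    """Rough per-shard document count, assuming an even spread."""
    return -(-total_docs // primary_shards)  # ceiling division


body = index_settings()
print(json.dumps(body, indent=2))
print(docs_per_shard(5_000_000, 5))
```

Documents are routed to shards by a hash of their ID, so the real split is only approximately even.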

Query DSL merges search, filters, and boosts

Elasticsearch gives you complete control over your search results. You can boost expensive items, hide out-of-stock products, and make product titles count more than descriptions.

This flexibility means your search results actually serve your business goals. High-margin products can appear first, seasonal items get temporary boosts, and you can adjust the weights on the fly because they are applied at query time rather than baked into the index. Instead of simple alphabetical matching, you get search results that drive revenue.
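As a rough sketch of what that looks like in the Query DSL, the request body below weights titles three times as heavily as descriptions, filters out out-of-stock items, and nudges seasonal products upward. The field names (`title`, `description`, `in_stock`, `seasonal`) and the boost values are assumptions for illustration; your catalog will differ.

```python
import json


def product_query(text: str, seasonal_boost: float = 1.5) -> dict:
    """Build a search body combining full-text match, filter, and boost."""
    return {
        "query": {
            "bool": {
                # Full-text match; "title^3" weights title hits 3x.
                "must": [{
                    "multi_match": {
                        "query": text,
                        "fields": ["title^3", "description"],
                    }
                }],
                # Filters narrow the result set without affecting the score.
                "filter": [{"term": {"in_stock": True}}],
                # Optional clause: matching documents score higher.
                "should": [{
                    "term": {
                        "seasonal": {"value": True, "boost": seasonal_boost}
                    }
                }],
            }
        }
    }


q = product_query("running shoes")
print(json.dumps(q, indent=2))
```

Because the boosts live in the request, changing `seasonal_boost` takes effect on the next search with no reindexing.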

Aggregations enable facets and analytics

Those product filters on the left side of most e-commerce sites? That’s aggregations working in real-time. Elasticsearch counts how many products match each brand, price range, or color while it’s running the main search query.

The same system provides valuable business intelligence. Which search terms convert best? What products are trending? Where are users hitting dead ends? All of this comes from the same queries powering the customer experience.
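Here is a hedged sketch of a single request that returns both search hits and facet counts. It assumes `brand` is mapped as a keyword field and `price` as a number; the price buckets are arbitrary.

```python
import json


def facet_request(text: str) -> dict:
    """Search body that returns hits plus brand and price facets."""
    return {
        "query": {"match": {"title": text}},
        "size": 20,
        "aggs": {
            # One bucket per brand, with a count of matching products.
            "by_brand": {"terms": {"field": "brand", "size": 10}},
            # Fixed price bands; "to" is exclusive, "from" is inclusive.
            "by_price": {
                "range": {
                    "field": "price",
                    "ranges": [
                        {"to": 50},
                        {"from": 50, "to": 200},
                        {"from": 200},
                    ],
                }
            },
        },
    }


req = facet_request("laptop")
print(json.dumps(req, indent=2))
```

The response carries the bucket counts under `aggregations`, next to the regular `hits`, which is what lets the sidebar filters update with every search.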

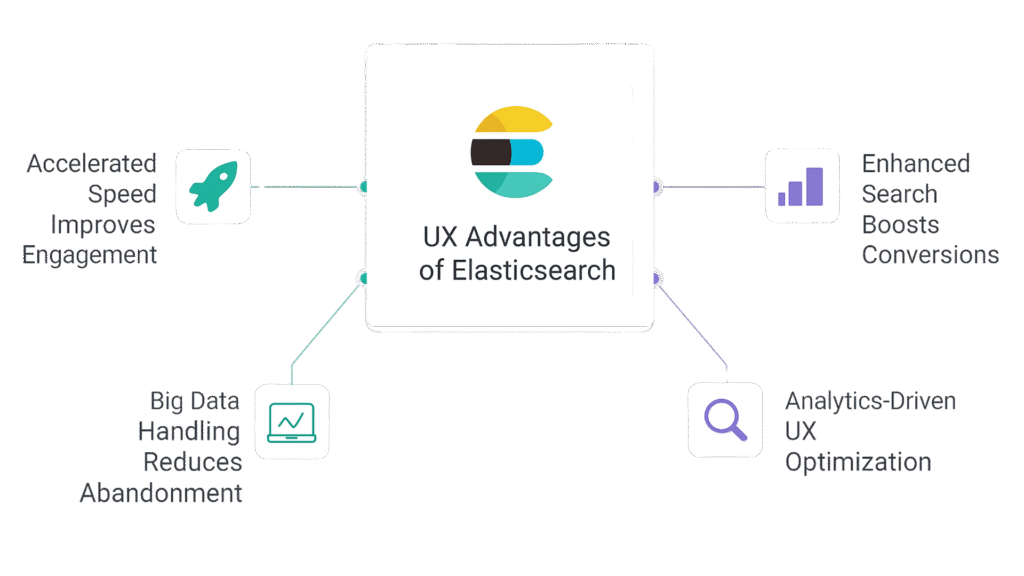

UX Advantages of Using Elasticsearch

Elasticsearch improves user experience in three main ways. Search results load fast enough to keep users engaged. The matching gets more relevant, which helps with conversions. And the personalized suggestions make people buy more stuff.

Accelerated Speed Improves Engagement

1. Sub-second responses change user behavior

Elasticsearch returns search results in milliseconds, even with millions of records to search through. The system spreads data across multiple nodes and processes queries in parallel. It uses in-memory operations and Lucene’s indexing algorithms to make this happen.

Fast search changes how people use your site. Users browse more products and switch between categories without waiting. They don’t get frustrated with slow loading, so they stick around longer. There’s a big difference between waiting half a second and waiting barely anything – users notice and behave differently.

For users, this speed difference is dramatic. No more staring at loading spinners or wondering if their search actually worked. Pages feel instantaneous, which keeps people engaged instead of clicking away. Research shows that when search times drop below one second, users perceive the wait as 76% shorter – essentially making it feel instant.

Fast search directly impacts the bottom line. People browse more products, click more results, and stick around through checkout instead of abandoning their cart out of frustration.

2. Distributed architecture maintains performance under load

When traffic spikes during sales events, traditional search systems often buckle. Elasticsearch’s distributed design spreads queries across multiple nodes, so additional load gets distributed instead of creating bottlenecks.

The system automatically balances work across available resources, and you can scale horizontally by adding more nodes during peak periods. This means consistent performance whether you’re handling 100 searches per minute or 10,000.

Enhanced Search Relevance Boosts Conversions

1. Field boosting and synonyms APIs fine-tune results to match intent

Not all product data matters equally. Product titles usually indicate purchase intent better than descriptions buried in specifications. With Elasticsearch field boosting, you can weight different fields so the most relevant matches appear first.

The synonyms API handles the language gap between how customers search and how products are listed. When someone searches “sneakers” but your catalog calls them “athletic footwear,” synonym mapping ensures they find what they want. This eliminates those frustrating zero-result pages that send customers to competitors.
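One way to wire up the sneakers example is a synonym filter applied at search time. The sketch below defines the synonyms inline in the index settings rather than through the synonyms API, purely to keep the example self-contained; the analyzer, filter, and field names are assumptions.

```python
import json

synonym_index = {
    "settings": {
        "analysis": {
            "filter": {
                # Terms on one line are treated as equivalent.
                "product_synonyms": {
                    "type": "synonym_graph",
                    "synonyms": ["sneakers, athletic footwear"],
                }
            },
            "analyzer": {
                "product_search": {
                    "type": "custom",
                    "tokenizer": "standard",
                    "filter": ["lowercase", "product_synonyms"],
                }
            },
        }
    },
    "mappings": {
        "properties": {
            "title": {
                "type": "text",
                "analyzer": "standard",
                # Synonyms expand the query, not the stored documents.
                "search_analyzer": "product_search",
            }
        }
    },
}

print(json.dumps(synonym_index, indent=2))
```

Applying synonyms only in the search analyzer means the synonym list can change without reindexing every product.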

2. Personalized suggestions raise average order value and conversion rates by up to 77%

Real-time personalization turns the search box into a sales channel. Based on browsing history, purchase patterns, and trending products, Elasticsearch can surface relevant suggestions as users type.

This isn’t just better UX – it’s measurable revenue impact. When customers discover products they weren’t initially looking for, average order values increase. Companies implementing personalized search suggestions have reported conversion rate improvements in the 50-77% range.
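The as-you-type part can be sketched with the completion suggester. This example covers only the prefix lookup; the personalization signals (browsing history, purchase patterns) would have to be fed in by your application, for instance through the `weight` value, which is an assumption here rather than a built-in feature. Field and suggester names are hypothetical.

```python
import json

# Mapping with a dedicated completion field for suggestions.
suggest_mapping = {
    "mappings": {"properties": {"suggest": {"type": "completion"}}}
}


def suggestion_doc(name: str, popularity: int) -> dict:
    """Product document; higher weight ranks the suggestion earlier."""
    return {"name": name, "suggest": {"input": [name], "weight": popularity}}


def suggest_request(prefix: str, size: int = 5) -> dict:
    """Body for a _search call that returns prefix completions."""
    return {
        "suggest": {
            "product_suggest": {
                "prefix": prefix,
                "completion": {"field": "suggest", "size": size},
            }
        }
    }


doc = suggestion_doc("Trail running sneakers", popularity=34)
req = suggest_request("trai")
print(json.dumps(req, indent=2))
```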

Big Data Handling Reduces Abandonment

1. Real-time indexing supports dynamic catalogs with minimal lag

E-commerce catalogs change constantly – prices update, inventory fluctuates, new products launch. Elasticsearch’s near real-time indexing means these changes appear in search results within seconds, not hours or days.

This prevents the classic problem where customers click on products only to find they’re unavailable or mispriced. Keeping search results synchronized with live inventory maintains trust and reduces the friction that leads to abandoned purchases.

2. 72% fewer abandoned carts when users find products on first search attempt

When shoppers can’t find what they want quickly, they leave. It’s that simple. Elasticsearch’s relevance tuning and query capabilities help ensure users find the right products on their first search attempt.

Data from real implementations shows up to 72% fewer abandoned carts when search accuracy improves. Better search doesn’t just improve user experience – it directly prevents lost sales.

Analytics-Driven UX Optimization

1. Search data reveals customer intent

Every search query contains valuable intelligence about what customers actually want. Elasticsearch captures this behavioral data, showing which terms are popular, which products get clicked most, and where searches fail to produce results.

This insight drives inventory decisions, marketing strategies, and product development. High-converting search terms can inform PPC campaigns. Popular but unavailable products signal restocking opportunities. Failed searches reveal gaps in the catalog or problems with search configuration.

2. Continuous monitoring enables rapid improvements

Search performance isn’t static. Customer behavior shifts, catalogs evolve, and business priorities change. Dashboards built on Elasticsearch data can track search latency, click-through rates, and conversion funnels in near real time.

Teams can run small experiments – adjusting relevance rules, testing new synonyms, or modifying product boosts – and immediately measure the impact. This iterative approach means search quality improves continuously rather than requiring major overhauls.

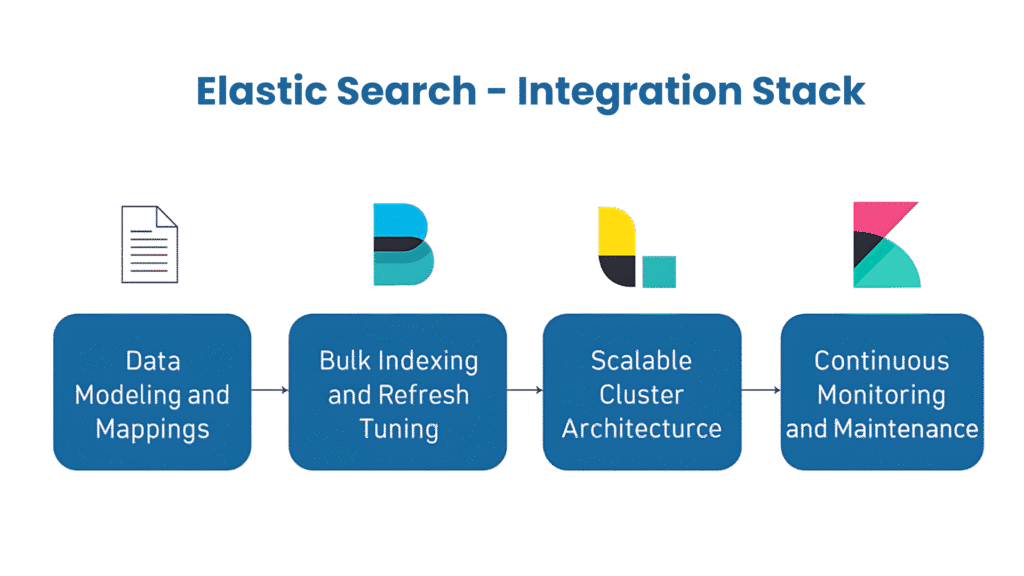

Integration Best Practices

Effective Elasticsearch integration involves precise data modeling and mappings, optimized bulk indexing with refresh tuning, a scalable cluster architecture, and continuous monitoring and maintenance. Together these keep search fast, reliable, and scalable for e-commerce platforms.

Data Modeling and Mappings

1. Explicit field mappings prevent indexing problems

While Elasticsearch can guess field types automatically, relying on dynamic mapping often creates headaches down the road. Different data types require different handling – full-text search fields need analysis and tokenization, while exact-match fields used for filtering should remain untouched.

Defining explicit mappings upfront ensures:

- Text fields get proper analysis for search relevance

- Keyword fields enable precise filtering and sorting

- Numeric and date fields support range queries accurately

- Field types stay consistent as new data gets indexed

Taking control of mappings eliminates the guesswork and prevents weird edge cases that break search functionality.
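A minimal sketch of an explicit mapping for a product index follows. The field names are assumptions, and `"dynamic": "strict"` is one possible choice: it makes Elasticsearch reject documents with unmapped fields instead of guessing their types. The small helper mirrors that check on the client side.

```python
import json

product_mapping = {
    "mappings": {
        "dynamic": "strict",  # reject fields that are not declared below
        "properties": {
            "title": {"type": "text"},          # analyzed for full-text search
            "brand": {"type": "keyword"},       # exact match, filters, sorting
            "price": {"type": "float"},         # range queries
            "created_at": {"type": "date"},     # date range queries
        },
    }
}


def unknown_fields(doc: dict, mapping: dict) -> list:
    """Return document fields that the mapping does not declare."""
    declared = mapping["mappings"]["properties"]
    return sorted(name for name in doc if name not in declared)


doc = {"title": "USB-C cable", "brand": "Acme", "colour": "black"}
print(json.dumps(product_mapping, indent=2))
print(unknown_fields(doc, product_mapping))
```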

2. copy_to fields simplify multi-field searches

When a single search query should span multiple fields (for example, product name, description, and SKU), copy_to provides a clean and efficient solution. Instead of running separate queries on each field, you can copy their values into one composite search field.

This approach offers several benefits:

- Performance gains by reducing query complexity.

- Consistent scoring since all relevant text resides in one field for relevance calculations.

- Simplified maintenance because boosting and analyzer settings can be applied once instead of being duplicated across multiple fields.

For businesses managing large, evolving catalogs, copy_to becomes a critical tool to keep the search index both lean and highly relevant without sacrificing accuracy.
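Here is a short sketch of the name, description, and SKU example. The combined field name `search_all` is an assumption; any name works as long as the source fields point at it.

```python
import json

copy_to_mapping = {
    "mappings": {
        "properties": {
            "name": {"type": "text", "copy_to": "search_all"},
            "description": {"type": "text", "copy_to": "search_all"},
            "sku": {"type": "keyword", "copy_to": "search_all"},
            # Receives the values of the three fields above at index time.
            "search_all": {"type": "text"},
        }
    }
}


def search_all_query(text: str) -> dict:
    """One match query against the combined field."""
    return {"query": {"match": {"search_all": text}}}


sources = [
    name
    for name, spec in copy_to_mapping["mappings"]["properties"].items()
    if spec.get("copy_to") == "search_all"
]
print(sources)
print(json.dumps(search_all_query("wireless mouse")))
```

The trade-off is that a single combined field loses per-field weighting, so it suits a broad "search everything" box better than finely tuned relevance.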

Bulk Indexing and Refresh Tuning

1. Leverage Bulk API with multithreaded clients to maximize throughput

If your indexing is crawling at 100 documents per minute, you’re probably sending them one at a time. That’s painfully slow.

Use Elasticsearch’s Bulk API instead. Send 1,000-5,000 documents at once, depending on their size. But don’t stop there – run multiple bulk requests at the same time using 4-6 threads.

This keeps your system constantly busy. While one batch processes, the next one’s already waiting. Teams that switch to this approach often see indexing jobs that took days now finish overnight. Your business gets faster results, and your development team stays sane.
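The batching and threading pattern can be sketched without any client library. The code below builds newline-delimited bulk bodies and hands them to a thread pool; `fake_send` is a stand-in for whatever actually POSTs to `/_bulk`, and the index name, batch size, and worker count are assumptions.

```python
import json
from concurrent.futures import ThreadPoolExecutor


def chunked(docs: list, size: int):
    """Yield consecutive slices of at most `size` documents."""
    for start in range(0, len(docs), size):
        yield docs[start:start + size]


def bulk_body(index: str, docs: list) -> str:
    """Build an NDJSON body: one action line plus one source line per doc."""
    lines = []
    for doc in docs:
        lines.append(json.dumps({"index": {"_index": index, "_id": str(doc["id"])}}))
        lines.append(json.dumps(doc))
    return "\n".join(lines) + "\n"  # the Bulk API requires a trailing newline


def index_all(docs: list, send, batch_size: int = 1000, workers: int = 4) -> int:
    """Send all batches concurrently; returns the number of batches sent."""
    bodies = [bulk_body("products", batch) for batch in chunked(docs, batch_size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(send, bodies))
    return len(results)


def fake_send(body: str) -> int:
    """Placeholder for an HTTP POST to /_bulk; counts documents instead."""
    return body.count("\n") // 2


docs = [{"id": i, "title": f"Product {i}"} for i in range(2500)]
batches_sent = index_all(docs, fake_send)
print(batches_sent)
```

In a real job you would also inspect each bulk response for per-item errors, since a bulk request can succeed overall while individual documents fail.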

2. Adjust index.refresh_interval to balance indexing speed against search freshness

During heavy indexing sessions, crank up that refresh_interval setting. The default 1 second refresh is insane when loading millions of records. Bumping it to 30 seconds or disabling it completely until the job finishes gives your CPU a break it desperately needs.

This approach prevents performance sacrifices during heavy indexing while maintaining the search freshness applications need once updates go live.
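As a small sketch, these are the settings bodies you might send to `PUT /<index>/_settings` before and after a large load. The 30-second value comes from the text above; `"-1"` disables refresh entirely, and `"1s"` restores the default.

```python
import json


def refresh_setting(interval: str) -> dict:
    """Body for an update-settings request that changes the refresh interval."""
    return {"index": {"refresh_interval": interval}}


before_load = refresh_setting("30s")   # or "-1" to disable refresh entirely
after_load = refresh_setting("1s")     # back to the default once loading ends

print(json.dumps(before_load))
print(json.dumps(after_load))
```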

Scalable Cluster Architecture

1. Plan sharding strategy to match expected query load and data volume

Many projects fail because teams start with just one shard, thinking “we don’t have much data yet.” Six months later, performance crashes and they’re forced into expensive reindexing that could have been prevented.

Plan for at least three times your current data size. If you have 10GB today, design for 30GB. Have 100 million documents? Plan for 300 million. You might have extra shards at first, but that’s better than getting stuck with slow performance later.

Keep each shard between 10 and 50GB. Smaller wastes resources, larger slows down searches. Most teams find 20-30GB per shard works best. Good planning now saves you from painful reindexing later and keeps things running smoothly as your business grows.
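The sizing rule above reduces to simple arithmetic. This sketch assumes the 3x growth factor from the text and a 25GB target shard size (the middle of the 20-30GB range); both are tunable guesses, not hard limits.

```python
import math


def primary_shard_count(current_gb: float,
                        growth_factor: float = 3.0,
                        target_shard_gb: float = 25.0) -> int:
    """Number of primary shards needed for the projected data size."""
    projected_gb = current_gb * growth_factor
    return max(1, math.ceil(projected_gb / target_shard_gb))


print(primary_shard_count(10))    # 10GB today, planned as 30GB
print(primary_shard_count(500))   # 500GB today, planned as 1,500GB
```

For the 10GB case this gives two shards of about 15GB each at the projected size, which stays inside the 10-50GB band.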

2. Employ replica shards for failover and high availability

Replicas aren’t optional, despite what budget-conscious managers might say. These exact copies of primary shards serve two critical purposes:

- High availability – Node crashes happen. When they do, replicas jump in immediately so searches keep working. No downtime, no angry users.

- Query load balancing – Instead of hammering one node with all the search requests, replicas spread the work around. More nodes handling queries means faster response times when traffic gets heavy.

How many replicas you need really depends on what you’re building. If downtime costs money (and it usually does), start with one replica for each primary shard. If you are running a high-traffic site, you’ll probably want more.

Either way, you’re covered when hardware decides to quit on you.

Continuous Monitoring and Maintenance

1. Track performance metrics via Elastic APM and Core Web Vitals integration

Slow searches are bad. Not knowing WHY they’re slow is worse. Setting up monitoring from day one prevents those 3 AM emergency calls that nobody wants.

Elastic APM, alongside Elasticsearch’s own cluster monitoring, shows you what’s actually happening under the hood – how long queries take, how fast data gets indexed, and whether your servers are sweating. Hook it up with Core Web Vitals and you can see how backend performance affects what users actually experience on your site.

This setup catches problems before users notice them. Query times suddenly jumping up? Could be a wonky filter or a server that’s getting hammered. Having this visibility means fixing issues before they become emergencies instead of scrambling when everything’s already broken.

2. Automate index lifecycle policies to manage storage and rollovers

Data grows – that’s reality. Without proper management, indexes balloon into massive, slow-searching monsters that nobody wants to deal with.

Elasticsearch Index Lifecycle Management (ILM) handles this automatically with policies based on size, age, or performance requirements. A typical product catalog setup might automatically roll over to new indexes at 50GB, move older indexes to cheaper storage, then delete them after set retention periods.

This keeps storage costs predictable, ensures queries run against optimally sized indexes, and prevents performance drops from bloated datasets. With automated lifecycle policies, search stays fast and storage stays controlled without constant manual intervention.
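A hedged sketch of such a policy is shown below, as the body you might send to `PUT _ilm/policy/<name>`. The 50GB rollover comes from the text; the 30-day and 90-day ages are placeholder assumptions. Moving data to cheaper nodes depends on how data tiers are set up in your cluster, so the warm phase here only merges segments.

```python
import json

ilm_policy = {
    "policy": {
        "phases": {
            "hot": {
                # Start writing to a new index once this one reaches 50GB.
                "actions": {"rollover": {"max_size": "50gb"}}
            },
            "warm": {
                "min_age": "30d",  # assumed age, measured from rollover
                "actions": {"forcemerge": {"max_num_segments": 1}},
            },
            "delete": {
                "min_age": "90d",  # assumed retention period
                "actions": {"delete": {}},
            },
        }
    }
}

print(json.dumps(ilm_policy, indent=2))
```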

Case Studies of Enhanced Experiences

1. Dell Global Commerce Platform

Scope:

Dell deployed Elasticsearch to power its global commerce platform across more than 60 markets and 21 languages. The platform indexes over 27 million documents, including product listings, drivers, knowledge-base articles, manuals, videos, and rich metadata like specifications, stock, pricing, and media assets.

Challenge:

Their legacy search system was creaking under the strain. It lacked multi-tenant support, struggled with indexing and horizontal scaling, and couldn’t deliver the speed or relevance users now expect. Page-load delays and slower query responses were negatively impacting conversions, especially in global, high-volume scenarios.

Implementation Highlights:

- Dell standardized on a two-cluster architecture—one for search and one for analytics. The analytics cluster indexes user click behavior to inform real-time relevance tuning and experimentation.

- They built language-specific pipelines using Elastic’s analyzers, synonym matching, stemming, stopword filtering, spell-check, and a custom “catch-all influencer” that enriches queries with content and taxonomy context for precise intent mapping.

- A “virtual assistant” interface gives users instant feedback—like faceted refiners for screen size or processor type—before executing a full search.

- Elastic’s multi-index capability also enabled feature testing through A/B experimentation, fine-tuning relevance strategies before broader rollout.

Impact Metrics:

- Server footprint shrank by 25–30%, while infrastructure efficiency improved markedly.

- Revenue per visit, click-through rates, average order value, and conversion rates all increased (exact figures kept internal).

- Customer satisfaction rose, especially after search latencies were trimmed to under 300 ms.

2. Docker Hub Search

The Challenge: Docker Hub handles tens of millions of container searches daily. Users need to quickly find the right images from a massive catalog that’s constantly changing with new uploads, updates, and download counts.

How They Built It: Docker used a dedicated Elasticsearch cluster with replica shards for reliability. They set up smart ranking that automatically promotes popular and official images without manual work. The system uses download counts and quality signals to push the best results to the top.

Results: Search got much faster – from over 1 second down to under 150 milliseconds. Users found what they needed more often, with 20% more people clicking the first result. The system now handles massive search volume while staying fast and relevant.
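Docker's actual ranking configuration isn't described here, so the following is only an illustration of the general technique: a `function_score` query that mixes text relevance with a popularity signal. The field names `pull_count` and `is_official` and the weights are hypothetical.

```python
import json


def popularity_query(text: str) -> dict:
    """Text match whose score is raised by download count and official status."""
    return {
        "query": {
            "function_score": {
                "query": {"match": {"name": text}},
                "functions": [
                    {
                        # log1p dampens very large counts.
                        "field_value_factor": {
                            "field": "pull_count",
                            "modifier": "log1p",
                            "missing": 0,
                        }
                    },
                    {
                        # Flat bonus for images flagged as official.
                        "filter": {"term": {"is_official": True}},
                        "weight": 2,
                    },
                ],
                "score_mode": "sum",   # add the function values together
                "boost_mode": "sum",   # then add them to the text score
            }
        }
    }


pq = popularity_query("nginx")
print(json.dumps(pq, indent=2))
```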

3. Guardian real-time newsroom analytics

Use-case:

The Guardian serves upwards of 5 million daily readers across its global digital properties. To deliver timely, relevant content and stay ahead in a fast-paced media environment, they built Ophan, an in-house analytics platform powered by Elasticsearch. It enables editors, journalists, and content strategists to make decisions based on real-time audience engagement.

Deployment facts:

- Elasticsearch ingests 40 million documents per day, powering Ophan’s real-time insights with minimal lag.

- Editors access live dashboards showing audience behavior, traffic sources, and content performance — enabling swift editorial adjustments like updating headlines or boosting trending stories on social platforms.

Results:

- Page views increased thanks to dynamic headline optimization, real-time feedback, and precise promotion strategy driven by Ophan.

- Performance issues are detected dramatically faster. What previously took hours to identify now triggers alerts within seconds, thanks to real-time monitoring through Elasticsearch.

This case demonstrates how not just search, but analytics-driven decision-making built on a scalable search platform, can radically change how real-time operations run — keeping content timely, relevant, and performant in a media world where every second counts.

Why Partner with RBM Software

AI/ML Expertise for Personalization, Pricing & Fraud Prevention

RBM Software doesn’t just stick to basic search and recommendations. RBM AI systems actually watch how customers behave and adapt on the fly – suggesting products people actually want, adjusting prices when demand shifts, and catching sketchy transactions before they become problems.

What makes this work is the speed factor. While competitors are still crunching yesterday’s data, RBM’s systems are already responding to what’s happening right now. E-commerce sites using their tech see better conversion rates and fewer headaches from fraud attempts.

Proven AR/VR, Voice Commerce & PWA Expertise for Seamless UX

The RBM team has shipped some pretty cool stuff – AR features that let customers see how furniture looks in their living room, voice shopping that actually understands what people want, and web apps that feel as smooth as native mobile apps.

Here’s the thing: customers don’t care about the technology behind it. They just want shopping to work whether they’re using their phone, talking to Alexa, or trying on virtual sunglasses. RBM gets this and builds accordingly.

Flexible Engagement: Hourly, Project, or Embedded Teams

Not every business needs the same type of help. Maybe you need a quick consultation to fix something specific. Maybe you’ve got a big project with a hard deadline. Or maybe you want to bring in specialists to work alongside your existing team.

RBM handles all these scenarios. No forcing you into packages that don’t fit. No minimum commitments that eat your budget. Just the support you actually need, structured how you need it.

U.S. Leadership with Global Talent for Faster Delivery

RBM’s leadership sits in the U.S., which means they get American business culture and market expectations. But their development teams are spread across different time zones, so work keeps moving even when the main office goes home.

This setup isn’t just about cost savings – though that’s nice. It’s about keeping projects moving without burning out any single team. Code gets written, bugs get fixed, and deadlines get met.

Final Takeaway

When your search works well, customers find what they want and buy it. When it doesn’t, they leave for a competitor’s site. Elasticsearch helps you build the kind of search that keeps people shopping instead of clicking away, even during your busiest sales periods.

Companies like Dell, Docker, and The Guardian aren’t using this tech because it’s trendy. They’re using it because it delivers results you can measure – more sales, happier customers, fewer support tickets.

RBM Software knows how to take Elasticsearch from “installed and running” to “actually driving business results.” Whether your current search is limping along, you’re worried about handling peak traffic, or you want to add AI features that customers actually use, they’ve done it before.

Want to see what better search performance looks like for your business? Connect with RBM Software today and see how our solutions can transform your e-commerce performance.