Generative AI in Ecommerce: Unlock the Future of Business Success

The use of LLMs is rising, but results can be inconsistent. Many organizations across industries are seeking ways to extract maximum value from their vast information repositories. Retrieval Augmented Generation (RAG), when combined with Large Language Models (LLMs), represents a revolutionary approach to knowledge management, search functionality, and intelligent automation. This architecture transforms how businesses interact with their data, enabling more intuitive information discovery and dynamic content generation. As adoption grows, Retrieval Augmented Generation is quickly becoming the backbone of scalable, accurate, and context-aware AI solutions.

Traditional search architectures have mostly relied on keyword matching, simple filters, and basic relevance algorithms. Such systems, typically built on legacy database technologies like PostgreSQL and MySQL, find it extremely difficult to cope in modern enterprise environments.

These limitations affect multiple domains, from eCommerce platforms to internal knowledge bases, customer support systems, and content management solutions. The pace of digital transformation has only highlighted the inadequacies of legacy search systems.

LLM-powered search represents a fundamental shift from keyword matching to semantic understanding. LLMs such as OpenAI’s GPT series have demonstrated remarkable capabilities in understanding and generating human-like text. When used in search systems, LLMs can understand user queries much better in terms of context, intent, and nuance.

However, LLMs have clear limitations. They depend on their training data, so they lack the latest information and domain-specific content. This is where RAG comes in.

Retrieval Augmented Generation (RAG) is a machine learning technique that augments LLMs by combining retrieval-based methods with generation-based capabilities. In other words, RAG is a framework that enhances LLMs by integrating them with external data sources.

Retrieval Augmented Generation is useful for tasks like answering users’ queries and developing content because it allows generative AI systems to leverage external knowledge sources to generate more accurate and context-aware responses. Retrieval methods such as semantic search are commonly used to match user inputs with relevant content and provide better results.

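Retrieval Augmented Generation (RAG) functions through two primary components working in close coordination: a retrieval component that finds relevant content in external knowledge sources, and a generation component in which the LLM uses that content to produce a response.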
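To make the retrieval and generation steps concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not a production design: the documents are invented, retrieval uses simple word-overlap cosine similarity instead of a real embedding model, and `generate` is a hypothetical stand-in for whatever LLM call a real system would make.

```python
import math
import re
from collections import Counter

# Toy knowledge base; these documents are made up for illustration.
DOCUMENTS = {
    "returns": "Items can be returned within 30 days of delivery for a full refund.",
    "shipping": "Standard shipping takes 3 to 5 business days within the country.",
    "warranty": "All office chairs include a two year warranty on moving parts.",
}


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, documents, top_k=1):
    """Retrieval step: return ids of the top_k documents that overlap the query."""
    query_vec = Counter(tokenize(query))
    scored = [
        (cosine(query_vec, Counter(tokenize(text))), doc_id)
        for doc_id, text in documents.items()
    ]
    scored.sort(reverse=True)
    return [doc_id for score, doc_id in scored[:top_k] if score > 0]


def build_prompt(query, documents, doc_ids):
    """Place the retrieved passages in front of the question."""
    context = "\n".join(f"[{doc_id}] {documents[doc_id]}" for doc_id in doc_ids)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


def answer(query, documents, generate):
    """Generation step: `generate` is a placeholder for an LLM call (assumption)."""
    doc_ids = retrieve(query, documents)
    prompt = build_prompt(query, documents, doc_ids)
    return {"answer": generate(prompt), "sources": doc_ids}
```

Calling `answer("How long does shipping take?", DOCUMENTS, generate=my_llm)` would pass the shipping passage to `my_llm` (any callable that takes a prompt string) and return its text together with the ids of the source documents used.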

This technical architecture translates directly into business value across multiple functions. By combining the retrieval and generation components, organizations can bridge the gap between their existing knowledge repositories and the powerful capabilities of modern AI. Let’s explore the specific benefits this architectural approach delivers to enterprises.

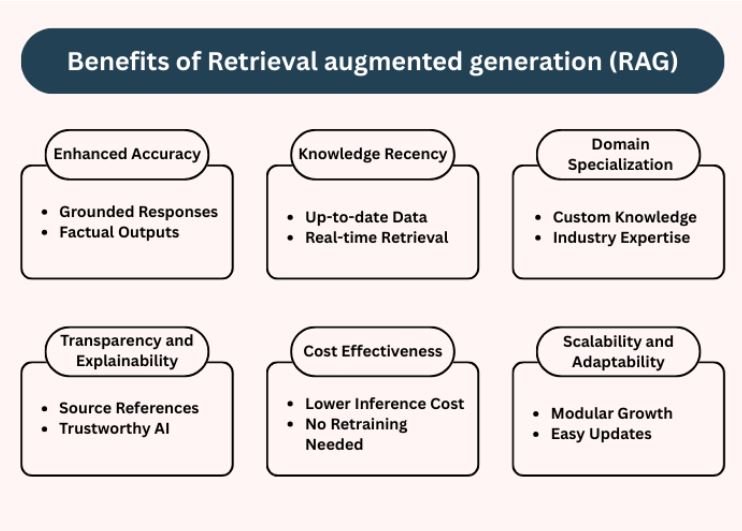

The Retrieval Augmented Generation (RAG) architecture delivers several significant benefits over both traditional search and standalone LLMs:

Retrieval Augmented Generation (RAG) fundamentally tackles the “hallucination” problem seen in standalone LLMs by grounding the model’s responses in factual, retrieved data. By supplying relevant external information to the LLM at query time, RAG ensures that the generated output is not only more accurate but also contextually aligned with the user’s question. This leads to more reliable and trustworthy responses while adhering to system instructions and safety constraints.

Retrieval Augmented Generation (RAG) systems can incorporate information beyond the LLM’s training cutoff, providing up-to-date responses. This is particularly valuable for domains with rapidly changing information.

Organizations can customize Retrieval Augmented Generation (RAG) systems to become experts in specific domains without expensive model retraining. By linking LLMs with tailored knowledge bases, enterprises can build AI-powered assistants that are proficient in their own domain, products, or services.

Some recent benchmarks suggest that domain-specific Retrieval Augmented Generation (RAG) implementations can outperform fine-tuned models in specialized fields while requiring significantly fewer computational resources and less development time.

Unlike standalone LLM outputs, which emerge from a “black box,” RAG systems can provide footnotes and references identifying the source documents used in crafting their answers. Such transparency is important for building trust with users and for meeting compliance needs in regulated businesses.

Improvements in retrieval accuracy and model performance help trim the operational cost of running LLMs and maximize ROI from AI investments. In addition, new information can be added to the knowledge base without retraining the LLM.

RAG architectures scale cost-effectively as data volumes grow and adapt to new data without a total system overhaul. As an organization’s knowledge changes over time, the retrieval component can keep incorporating new material without retraining the generation component.
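The point about adding knowledge without retraining can be illustrated with a small Python sketch. It assumes a simple in-memory keyword index (the class name `KeywordIndex` and the sample product text are hypothetical); a real deployment would more likely use a vector database or search engine, but the principle is the same: updating the index changes what can be retrieved, while the language model itself is untouched.

```python
import re
from collections import defaultdict


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


class KeywordIndex:
    """Hypothetical in-memory inverted index that can be updated at any time."""

    def __init__(self):
        self._postings = defaultdict(set)  # term -> ids of documents containing it
        self._docs = {}                    # doc_id -> original text

    def remove(self, doc_id):
        """Drop a document and its postings, if it is present."""
        if doc_id in self._docs:
            for term in tokenize(self._docs.pop(doc_id)):
                self._postings[term].discard(doc_id)

    def add(self, doc_id, text):
        """Add or replace a document; no model retraining is involved."""
        self.remove(doc_id)
        self._docs[doc_id] = text
        for term in set(tokenize(text)):
            self._postings[term].add(doc_id)

    def search(self, query):
        """Rank documents by how many distinct query terms they contain."""
        counts = defaultdict(int)
        for term in set(tokenize(query)):
            for doc_id in self._postings.get(term, ()):
                counts[doc_id] += 1
        return sorted(counts, key=lambda d: (-counts[d], d))


index = KeywordIndex()
before = index.search("iphone 16 case")   # nothing indexed yet
index.add("case-16", "Slim case compatible with iPhone 16 Pro Max")
after = index.search("iphone 16 case")    # the new document is found immediately
```

In this sketch the new product page becomes retrievable as soon as `add` returns, which is the behavior the paragraph above describes.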

While Retrieval Augmented Generation (RAG) is transforming various industries, eCommerce stands out as one of the most impactful use cases due to its unique challenges:

Modern eCommerce catalogs contain thousands of products with intricate specifications, attributes, and user reviews. Traditional search struggles to understand nuanced queries like “a comfortable office chair for lower back pain” and to compare the products that might satisfy them.

RAG systems interpret product information alongside descriptions, reviews, and customer feedback to deliver intelligent results. According to Salesforce Commerce Cloud data, 53% of retailers were using customer service agents to place orders on behalf of customers.

Customer service in eCommerce often gets overwhelmed with specific, product-related questions. RAG-powered assistants can answer questions such as “Is this phone case compatible with iPhone 16 Pro Max?” by retrieving relevant documentation in real time. By also drawing on the customer’s previous purchases or support history, branded assistants can personalize support, including comprehensive product comparisons across multiple listings.

RAG elevates the existing level of personalization in eCommerce by providing a new source of intelligence. Rather than suggesting vague “popular products,” RAG draws on specific customer data and generates tailored suggestions based on past purchases, buying habits, and interests.

A report by Accenture found that 91% of consumers are more likely to purchase from brands that recognize, remember, and provide relevant recommendations and offers.

While RAG offers many advantages, organizations face several challenges in implementation:

Retrieval Augmented Generation (RAG) systems require high-quality, well-structured data sources to function effectively. Many organizations struggle with fragmented data, inconsistent formatting, and information silos.

To solve these issues, establish a data governance framework, develop automated data cleansing and normalization pipelines, and set up continuous data quality monitoring.
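As a rough illustration of what a cleansing and normalization step might look like, the Python sketch below strips HTML remnants, normalizes Unicode and whitespace, and drops empty or duplicate records. The record layout (`id` and `text` keys) and the function names are assumptions made for this example, and counting rejected records is only one simple way to monitor data quality.

```python
import html
import re
import unicodedata


def normalize_text(raw):
    """Return a cleaned, consistently formatted version of the raw text."""
    text = html.unescape(raw)                   # "&nbsp;" and similar entities
    text = re.sub(r"<[^>]+>", " ", text)        # strip leftover HTML tags
    text = unicodedata.normalize("NFKC", text)  # unify compatibility characters
    return re.sub(r"\s+", " ", text).strip()    # collapse runs of whitespace


def clean_records(records):
    """Normalize records and reject empty or duplicate ones.

    Returns (cleaned, rejected_ids); the rejected list can feed a simple
    data quality metric.
    """
    seen = set()
    cleaned, rejected = [], []
    for record in records:
        text = normalize_text(record.get("text", ""))
        key = text.casefold()  # case-insensitive duplicate detection
        if not text or key in seen:
            rejected.append(record.get("id"))
            continue
        seen.add(key)
        cleaned.append({"id": record.get("id"), "text": text})
    return cleaned, rejected


raw_records = [
    {"id": 1, "text": "<p>Office&nbsp;chair</p>"},
    {"id": 2, "text": "office chair"},  # duplicate of record 1 after cleaning
    {"id": 3, "text": "   "},           # empty after cleaning
]
cleaned, rejected = clean_records(raw_records)
```

Here only record 1 survives, with its text reduced to `Office chair`; records 2 and 3 are reported as rejected so that their share of the input can be tracked over time.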

As data volumes grow and queries become more intricate, ensuring quick response times becomes even more difficult.

To tackle this problem, use tiered caching systems that store frequently accessed information, together with hybrid search strategies that combine BM25 with vector similarity.

Ensuring that the retrieved data is pertinent to the user’s intent remains one of the hardest tasks, especially for complex intents or ambiguous queries.

Fine-tuning embedding models on domain-specific data can help, along with feedback loops that capture user interactions. Microsoft Research found that domain-adapted embedding models can significantly improve retrieval precision compared to general-purpose models.
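The sketch below shows one way BM25 and vector similarity could be combined, with an in-memory cache in front of the search function. Several things here are assumptions for illustration: the documents, the two-dimensional "embeddings" (a real system would get vectors from an embedding model), the equal weighting `alpha=0.5`, and the use of `functools.lru_cache` as a single cache tier rather than a full tiered design.

```python
import math
from collections import Counter
from functools import lru_cache

# Toy catalog and hypothetical 2-d embeddings, invented for illustration.
DOCS = {
    "chair": "ergonomic office chair with lumbar support",
    "desk": "standing desk with adjustable height",
    "lamp": "led desk lamp with adjustable brightness",
}
DOC_VECTORS = {"chair": (0.9, 0.1), "desk": (0.2, 0.8), "lamp": (0.1, 0.9)}

K1, B = 1.5, 0.75  # commonly used BM25 parameters
TOKENS = {doc_id: text.split() for doc_id, text in DOCS.items()}
AVG_LEN = sum(len(tokens) for tokens in TOKENS.values()) / len(TOKENS)


def bm25(query_terms, doc_id):
    """BM25 score of one document for the given query terms."""
    tf = Counter(TOKENS[doc_id])
    n_docs = len(TOKENS)
    score = 0.0
    for term in query_terms:
        df = sum(1 for tokens in TOKENS.values() if term in tokens)
        if df == 0 or tf[term] == 0:
            continue
        idf = math.log(1 + (n_docs - df + 0.5) / (df + 0.5))
        length_norm = K1 * (1 - B + B * len(TOKENS[doc_id]) / AVG_LEN)
        score += idf * tf[term] * (K1 + 1) / (tf[term] + length_norm)
    return score


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


@lru_cache(maxsize=1024)  # repeated queries are served from memory
def hybrid_search(query, query_vector, alpha=0.5):
    """Rank documents by a weighted mix of BM25 and vector similarity.

    query_vector must be a tuple so the arguments are hashable for the cache.
    """
    terms = query.lower().split()
    lexical = {doc_id: bm25(terms, doc_id) for doc_id in DOCS}
    top = max(lexical.values()) or 1.0  # scale BM25 scores into [0, 1]
    scores = {
        doc_id: alpha * lexical[doc_id] / top
        + (1 - alpha) * cosine(query_vector, DOC_VECTORS[doc_id])
        for doc_id in DOCS
    }
    return tuple(sorted(scores, key=scores.get, reverse=True))
```

A call such as `hybrid_search("office chair", (1.0, 0.0))` ranks the chair first because it matches both lexically and in vector space. The second identical call is answered from the cache, which can be checked with `hybrid_search.cache_info()`.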
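A feedback loop needs a metric to track. One common choice is precision at k, the share of the top k retrieved items that were relevant. The Python sketch below assumes a hypothetical feedback log in which user clicks serve as a proxy for relevance; that proxy, the log layout, and the function names are assumptions for illustration.

```python
def precision_at_k(retrieved, relevant, k):
    """Fraction of the top-k retrieved ids that appear in the relevant set."""
    if k <= 0:
        raise ValueError("k must be positive")
    return sum(1 for doc_id in retrieved[:k] if doc_id in relevant) / k


def mean_precision_at_k(feedback_log, k):
    """Average precision@k over logged queries (clicks used as relevance)."""
    scores = [
        precision_at_k(entry["retrieved"], entry["clicked"], k)
        for entry in feedback_log
    ]
    return sum(scores) / len(scores) if scores else 0.0


# Hypothetical feedback log: what was shown and what the user clicked.
feedback_log = [
    {"retrieved": ["a", "b", "c", "d"], "clicked": {"a", "c"}},
    {"retrieved": ["e", "f", "g", "h"], "clicked": {"e", "f"}},
]
baseline = mean_precision_at_k(feedback_log, k=2)
```

Computing the same number before and after swapping in a domain-adapted embedding model gives a direct, if simplified, way to see whether retrieval precision actually improved.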

Effective Retrieval Augmented Generation (RAG) systems require significant computational resources for both retrieval and generation, and maintaining a large, indexed knowledge base demands considerable storage. Plan capacity and costs carefully to make the best use of both.

Large Language Models and Retrieval-Augmented Generation continue to evolve rapidly, unlocking new possibilities beyond traditional search capabilities:

RAG is not just limited to text search; multi-modal Retrieval Augmented Generation (RAG) unlocks new capabilities for content generation and processing from various data types like images, audio, and even video.

Imagine searching for a product by uploading a picture instead of typing a description, or getting customer support by sharing an image of a damaged item along with a message. This multi-format intelligence is already reshaping how users interact with digital platforms.

RAG systems will continue to draw on user-specific data, allowing them to provide personalized responses across use cases such as content generation and product recommendations in eCommerce.

Retrieval Augmented Generation (RAG) is becoming proactive, resembling a digital assistant more than an AI model. Future RAG systems will be able to anticipate users’ needs and connect diverse knowledge sources into sensible action pathways, resulting in smarter decision-making and execution.

For example, we can expect follow-up emails or meeting reports to be drafted autonomously with little manual intervention.

Forward-thinking organizations use Retrieval Augmented Generation (RAG) to unify knowledge across departments such as sales, support, marketing, and product, connecting them in a single ecosystem. This not only improves internal decision-making but also enhances customer experience.

RBM Software has established itself as a leader in implementing Retrieval Augmented Generation (RAG) systems across diverse industries, specifically eCommerce, through a combination of technical expertise and flexible engagement models:

RBM doesn’t just drop in a solution; rather, we take the time to understand your systems, data, and business goals. Our process begins with a thorough assessment and strategy phase, ensuring every decision aligns with your objectives. Then, we move into architecture design, building scalable, microservices-based systems designed specifically for your use case.

Whether you need extra AI talent to boost your team, want a turnkey solution with clear timelines and costs, or prefer a flexible, evolving engagement, RBM offers it all. Our models include staff augmentation, fixed-scope projects, time-and-materials billing, and complete quality assurance support.

RBM has hands-on experience across industries. From improving product discovery and support in eCommerce to optimizing compliance and documentation workflows in manufacturing, finance, and healthcare, we’ve seen it all and know how to build systems that work in the real world.

RBM isn’t just building Retrieval Augmented Generation (RAG) systems. We’re helping companies reimagine how knowledge is discovered, used, and shared.

Ready to transform your organization with LLM-powered search and Retrieval Augmented Generation (RAG)? RBM Software offers a comprehensive assessment of your current capabilities and challenges, providing a clear roadmap to implementation success.

Our team of experts specializes in transforming legacy systems into modern, AI-powered platforms through scalable, microservices-based architectures. Our approach combines offshore development efficiency with cutting-edge AI expertise, delivering world-class solutions that scale globally without compromising quality.

Schedule a free consultation to evaluate your current search and knowledge management capabilities, identify high-impact opportunities for Retrieval Augmented Generation (RAG) implementation, and calculate potential ROI and performance improvements.

Contact us today to learn how RBM Software can help you harness the power of LLM-powered search and RAG.